How to Run Isolated Tests Using Docker Compose on AWS

Nowadays, even the simplest application relies on data. For developers, this means writing complex systems for accessing data stores and the information in them. For organizations that want to be data-driven, it is important to build such data operations skillfully and to ensure their efficiency and reliability. In this article, we will talk about how to ensure the accuracy of business logic by setting up a testing system.

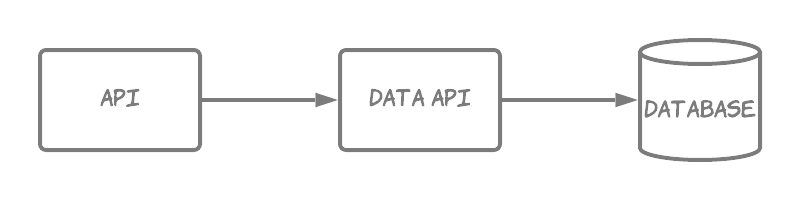

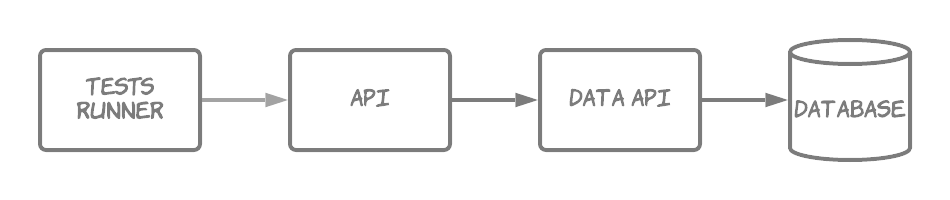

Let’s imagine you have the following setup in your project: a project API that communicates with a Data API to get data from the database and forwards the final results to the web application. We intentionally put the Data API between the API and the database just to make the example a little more complex.

As you may have guessed, the goal is to cover the project API with a set of integration tests that verify its business logic and guarantee its stability. Tests should run independently for each new code change. The ideal case is to test the whole data flow from the database to the API’s response, including communication via the Data API. Of course, each part should have its own set of unit tests with mocked dependencies to ensure it works correctly. But the idea here is to check the integration between all the components and be sure that the data flow is correct.

On top of that, we have a set of end-to-end tests that cover the UI and the data presented to the user, but we need faster feedback when business logic breaks. Initially, we started with a set of smoke tests that ran against a live environment and cleaned up all the test data at the end. This approach worked well for checking the general health of the API, but it was hard to cover all the required test cases and build a robust, comprehensive test suite.

Moreover, we have to address some important points:

- The main business logic in the APIs sits in SQL queries. It is hard to cover them with unit tests, so the ideal solution for this case is a suite of functional integration tests.

- The APIs work with the Vertica database, which is used for analytical solutions. The data there is prepared for reporting and doesn’t have individual row identifiers, as typical OLTP databases do. It is also not suited to frequent update or delete operations. Thus, we cannot execute tests on the same database and just roll back all transactions.

As a result, we came up with the idea of creating a completely isolated test environment for our application. Long story short, the ideal candidate for this purpose is Docker Compose; those unfamiliar with it can read the Overview of Docker Compose in the official docs. The Compose file defines all parts of our environment and starts them on your local machine. Then we can execute a suite of functional tests against it. This approach solves all the aforementioned challenges and allows automated test execution, including embedding it into the CI/CD process.

Local Docker Compose file

The local Compose file looks pretty straightforward. We define here all our components and settings for them.

    version: '3'
    services:
      api:
        image: "api:latest"
        ports:
          - "8080:80"
        environment:
          DataApiUrl: http://data-api/
      db:
        image: "vertica"
        ports:
          - "5433:5433"
      data-api:
        image: "data-api:latest"
        ports:
          - "8081:80"
        environment:
          DbConnection: "Driver=Vertica;Servername=db;Port=5433;UID=username;PWD=secret;Database=databasename;"

Then you should run the docker-compose up command, and the API will be available for testing at http://localhost:8080/. Next, you point local tests to this URL and execute them. The magic starts happening; you are running API tests against a real application locally without any connection to your infrastructure.
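One way to keep the same tests usable both locally and in other environments is to read the API address from configuration instead of hard-coding it. The Python sketch below is only an illustration of that idea (our own tests are not written in Python). It assumes the address is passed in an environment variable named ApiUrl and falls back to the local Compose address; the helper names api_base_url and endpoint, and any paths passed to them, are hypothetical.

```python
import os
from urllib.parse import urljoin

# Address exposed by the local Compose file in this article.
DEFAULT_API_URL = "http://localhost:8080/"


def api_base_url(env=None):
    """Return the API base URL, preferring the ApiUrl environment variable."""
    env = os.environ if env is None else env
    url = env.get("ApiUrl") or DEFAULT_API_URL
    # Normalize to a trailing slash so urljoin keeps the whole base path.
    return url if url.endswith("/") else url + "/"


def endpoint(path, env=None):
    """Build an absolute URL for a relative API path (paths are hypothetical)."""
    return urljoin(api_base_url(env), path.lstrip("/"))
```

With no variable set, endpoint("/reports") resolves against http://localhost:8080/, so the tests hit the locally running stack by default.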

It looks simple, but what if you want to automate this test process and, let’s say, run the tests every night? This is not a problem: we can use the AWS ECS CLI for this purpose. AWS has a great tutorial on how to run Docker Compose on ECS, but as always, there are some practical nuances and issues that come up in the real world. We will share our experience.

Adding a Tests Runner

In the previous step, we executed our integration tests locally, from the IDE. Now we need to run our tests on AWS, so we introduce a new component called Tests Runner. It is a simple Docker image in which your tests are executed. In our project, we use Groovy plus Spock to write integration tests, so our Tests Runner image uses Gradle to start test execution via the gradle test task. It is pretty easy to create a test runner for your project: you just need a Docker image with the required packages and a script that launches the tests.

As a result, you can include your Docker Tests Runner into the Docker Compose file and deploy it together with other application components.
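The launch script inside such an image can be very small. As a rough sketch, assuming Python is available in the image (our actual runner may look different), the script below starts the test command and hands back its exit code, so that whatever started the container can tell whether the tests passed. The function names run_tests and main are hypothetical; only the gradle test command comes from our setup.

```python
import subprocess
import sys


def run_tests(command=("gradle", "test")):
    """Run the test command and return its exit code."""
    return subprocess.run(list(command)).returncode


def main(argv=None):
    """Entry point: an optional command on the command line replaces the default."""
    args = sys.argv[1:] if argv is None else list(argv)
    return run_tests(args) if args else run_tests()
```

Ending the script with sys.exit(main()) makes the container exit with the status of the test run.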

Creating a test database

A few notes on working with databases and how to create a test database instance. Overall, the steps are the following:

- Find a public Docker image for the database and create a custom image from it.

- Use shell scripts in the custom image to create a database and initialize its schema. We store database scripts for a schema definition in a separate GitHub repository and create a new image version as soon as a schema is updated, and publish it to our internal Docker registry.

- In the Tests Runner, we have a task that waits for the database and starts test execution only when the database is up and running and the schema is created.

- Each test is responsible for its data preparation and cleanup. We have multiple insert scripts that fill database tables with required data.
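The waiting step from the list above can be approximated with a simple TCP probe. This is a minimal Python sketch, not our actual task: the function name wait_for_port and its defaults are hypothetical. Note that an open port only shows that the database process accepts connections; confirming that the schema exists would additionally require running a query through a database driver.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For the setup in this article, the runner could call wait_for_port("localhost", 5433) and start the tests only if it returns True.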

Finally, you can reuse this approach to build a Docker image for each database type that you have. We use a Vertica database, but the same approach should work for other database types, including MS SQL, PostgreSQL and even Amazon DynamoDB and Elasticsearch.

AWS ECS CLI process and files

AWS ECS CLI requires you to create two main files: a Compose file with a description of your application and an ECS parameters file with settings required to run your app on AWS ECS.

You can reuse your local Compose file and modify it for the ECS version. There are several steps that you should do:

- Add the logging part. It will create corresponding CloudWatch log groups and streams. This will allow you to read logs from application containers.

- Adjust ports. In awsvpc network mode, the host port and the container port in the Compose file must be the same. There is no way to remap ports as we did in the local environment, e.g., 8081:80 for the Data API. Therefore, you should update your container to run the API on 8081 and use the 8081:8081 mapping.

- Replace service names with localhost. Containers that run in the same ECS task in awsvpc mode share a network interface, so they can reach each other on localhost. AWS has a service discovery mechanism, but it is a little too complex for our needs, so we go with this simpler approach. You can find a detailed overview of service discovery here.
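The three adjustments above can also be expressed as a small check that runs before deployment. The Python sketch below is an assumption-laden illustration rather than part of our tooling: it takes a Compose file already loaded into a dict (with environment given as a mapping), and the function name ecs_compose_problems is hypothetical. It also flags a port claimed by two services, which is our inference from the example, since the containers share one network interface.

```python
import re


def ecs_compose_problems(compose):
    """List reasons a Compose dict would not fit the awsvpc setup described above."""
    services = compose.get("services", {})
    problems = []
    seen_ports = {}
    for name, service in services.items():
        # Step 1: every service should send its logs to CloudWatch.
        if service.get("logging", {}).get("driver") != "awslogs":
            problems.append(f"{name}: no awslogs logging configured")
        # Step 2: host and container ports must match and be unique in the task.
        for mapping in service.get("ports", []):
            host, _, container = str(mapping).partition(":")
            if container and host != container:
                problems.append(f"{name}: mapping {mapping} must use the same port twice")
            port = container or host
            if port in seen_ports:
                problems.append(f"{name}: port {port} is already used by {seen_ports[port]}")
            seen_ports.setdefault(port, name)
        # Step 3: other services are reached via localhost, not by service name.
        for key, value in service.get("environment", {}).items():
            for other in services:
                pattern = rf"(?<![\w-]){re.escape(other)}(?![\w-])"
                if other != name and re.search(pattern, str(value)):
                    problems.append(f"{name}: {key} still refers to service '{other}'")
    return problems
```

Run against the local Compose file from earlier, this reports the missing logging sections, the 8080:80 and 8081:80 mappings, and the data-api and db host names; an empty list means the file follows all three steps.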

You can examine the final docker-compose-ecs.yaml file below. For additional details, read the official docs about supported Docker Compose syntax.

    version: '3'
    services:
      api:
        image: "api:latest"
        ports:
          - "80:80"
        environment:
          DataApiUrl: http://localhost:8081/
        logging:
          driver: awslogs
          options:
            awslogs-group: api-tests
            awslogs-region: us-east-1
            awslogs-stream-prefix: api
      db:
        image: "vertica"
        ports:
          - "5433:5433"
        logging:
          driver: awslogs
          options:
            awslogs-group: api-tests
            awslogs-region: us-east-1
            awslogs-stream-prefix: db
      data-api:
        image: "data-api:latest"
        ports:
          - "8081:8081"
        environment:
          DbConnection: "Driver=Vertica;Servername=localhost;Port=5433;UID=username;PWD=secret;Database=databasename;"
        logging:
          driver: awslogs
          options:
            awslogs-group: api-tests
            awslogs-region: us-east-1
            awslogs-stream-prefix: data-api
      runner:
        image: "runner:latest"
        environment:
          ApiUrl: http://localhost:80/
        logging:
          driver: awslogs
          options:
            awslogs-group: tests-runner
            awslogs-region: us-east-1
            awslogs-stream-prefix: runner

The ecs-params.yaml file defines additional parameters related to the AWS ECS configuration. You can find more details on the Using Amazon ECS Parameters page of the Amazon Elastic Container Service documentation.

Please note that the following example has placeholders for TaskExecutionRole, Subnet and SecurityGroup parameters. Adjust them to correspond to your infrastructure.

    version: 1
    task_definition:
      services:
        api:
          cpu_shares: 256
          mem_limit: 2G
          mem_reservation: 2G
        data-api:
          cpu_shares: 256
          mem_limit: 2G
          mem_reservation: 2G
        db:
          cpu_shares: 2048
          mem_limit: 4G
          mem_reservation: 4G
        runner:
          cpu_shares: 512
          mem_limit: 2G
          mem_reservation: 2G
      # Placeholders are quoted so that YAML does not read "#{...}" as a comment.
      task_execution_role: "#{TaskExecutionRole}"
      ecs_network_mode: awsvpc
      task_size:
        mem_limit: 16GB
        cpu_limit: 4096
    run_params:
      network_configuration:
        awsvpc_configuration:
          subnets:
            - "#{Subnet}"
          security_groups:
            - "#{SecurityGroup}"
          assign_public_ip: DISABLED
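As a quick sanity check of these numbers, the per-container values should fit inside the task size. The Python sketch below adds them up for a parameters file already loaded into a dict. It is an illustration only: the function names to_mib and task_headroom are hypothetical, and it assumes that G and GB both denote multiples of 1024 MiB.

```python
import re

# Assumption: G/GB are treated as 1024 MiB, M/MB as 1 MiB.
UNITS = {"M": 1, "MB": 1, "G": 1024, "GB": 1024}


def to_mib(value):
    """Convert strings such as '2G' or '16GB' to MiB."""
    match = re.fullmatch(r"(\d+)\s*([A-Za-z]+)", str(value).strip())
    if not match or match.group(2).upper() not in UNITS:
        raise ValueError(f"unsupported memory value: {value!r}")
    return int(match.group(1)) * UNITS[match.group(2).upper()]


def task_headroom(params):
    """Return (spare_cpu_units, spare_memory_mib) for an ecs-params dict."""
    task = params["task_definition"]
    services = list(task["services"].values())
    cpu_used = sum(s["cpu_shares"] for s in services)
    mem_used = sum(to_mib(s["mem_limit"]) for s in services)
    size = task["task_size"]
    return int(size["cpu_limit"]) - cpu_used, to_mib(size["mem_limit"]) - mem_used
```

For the file above, the containers request 3072 CPU units and 10 GB of memory in total, which leaves 1024 CPU units and 6 GB spare within the 4096 / 16GB task size. A negative value in either position would mean the containers ask for more than the task provides.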

Launch integration tests using AWS ECS tasks

Now we have everything we need to run the tests in AWS ECS, and we will use Fargate for this purpose.

First of all, you have to install and configure ECS CLI. After this, you can work with AWS ECS.

The first step is to configure the ECS cluster via the ecs-cli configure command, where you define the cluster and config-name parameters.

    ecs-cli configure --cluster integration-tests --default-launch-type FARGATE --config-name integration-tests --region us-east-1

Then you can start the real AWS ECS test cluster using the ecs-cli up command with your network details: vpc, subnets and security-group.

    ecs-cli up --cluster-config integration-tests --vpc vpc-id --subnets subnet-ids --security-group security-group-id --force

Now the cluster is created and you are ready to launch the integration tests in it. The ecs-cli compose up command will create an ECS task with the integration tests, as well as all the required infrastructure defined in the Compose file and the ECS settings file. One note here: you should provide the IAM role for the test task. It should be created beforehand and have access to all required resources.

    ecs-cli compose --file docker-compose-ecs.yaml --ecs-params ecs-settings.yaml --project-name integration-tests --task-role-arn taskRoleArn up --create-log-groups --cluster-config integration-tests

ECS will then allocate all the needed resources for your services, and all containers from the Compose file will be running simultaneously. In our case, we will execute functional integration tests against a real database instance and two APIs, which is really awesome!

Finally, you can script all the previous commands and integrate test execution into your CI/CD process to improve your delivery pipeline.
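As a rough illustration of such a script, the Python sketch below assembles the three ecs-cli commands from this section as argument lists and runs them in order. It uses only the flags shown above; the function names ecs_cli_commands and run_all are hypothetical, and all identifiers passed in (VPC, subnets, security group, role ARN, file names) are placeholders for your own values.

```python
import subprocess


def ecs_cli_commands(name, region, vpc, subnets, security_group,
                     compose_file, ecs_params_file, task_role_arn):
    """Build the three ecs-cli invocations from this article as argument lists."""
    return [
        ["ecs-cli", "configure", "--cluster", name,
         "--default-launch-type", "FARGATE",
         "--config-name", name, "--region", region],
        ["ecs-cli", "up", "--cluster-config", name, "--vpc", vpc,
         "--subnets", subnets, "--security-group", security_group, "--force"],
        ["ecs-cli", "compose", "--file", compose_file,
         "--ecs-params", ecs_params_file, "--project-name", name,
         "--task-role-arn", task_role_arn, "up",
         "--create-log-groups", "--cluster-config", name],
    ]


def run_all(commands, runner=subprocess.run):
    """Run the commands in order; check=True stops at the first failure."""
    for command in commands:
        runner(command, check=True)
```

Passing the commands as lists avoids shell quoting issues, and the runner parameter makes it possible to dry-run the sequence in a pipeline by printing the commands instead of executing them.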

This is not the end

We hope our example with isolated integration tests illustrated the power of running Docker Compose files on AWS infrastructure. This opens up a lot of new possibilities for task automation and for running complex processes on top of the AWS cloud right from your laptop or CI/CD tool.

Moreover, Docker and AWS continue their partnership to provide native ECS integration for Docker Compose, which looks very promising and exciting, and is definitely worth a try.

If you’re looking for more specific case studies and detailed engineering how-tos, you can find them here. Interested in partnering with a professional team? Check out the GreenM services page.
