How to use a local ChatGPT in healthcare securely?

Intro

ChatGPT seems to be everywhere, yet not everyone can use it freely because of security, credibility, and other concerns in their domain. This is especially true in healthcare, where sensitive patient data is involved.

On the one hand, the integration of AI-driven tools like ChatGPT already shapes patient experience and personalized care, streamlines administrative processes, and provides valuable support to healthcare professionals. On the other hand, the concerns mentioned above still hold people back from using it.

Some figures: according to the NCBI’s report, most participants (75.1%) were comfortable with incorporating ChatGPT into their healthcare practice. Nevertheless, concerns about the credibility and source of information provided by AI chatbots (46.9%) were identified as the main barrier. According to Malwarebytes, 81% of respondents were concerned about possible security and safety risks, and 63% don’t trust the information it produces.

So the concerns exist, and so does the desire to use the tool. Now imagine that you could use ChatGPT freely, safely, and reliably. Sounds unbelievable? It is real. In this article, we will discuss how you can run healthcare data operations ethically and securely while using this AI tool.

Evolve your own use case with our AI Transformation service. We have a secure solution!

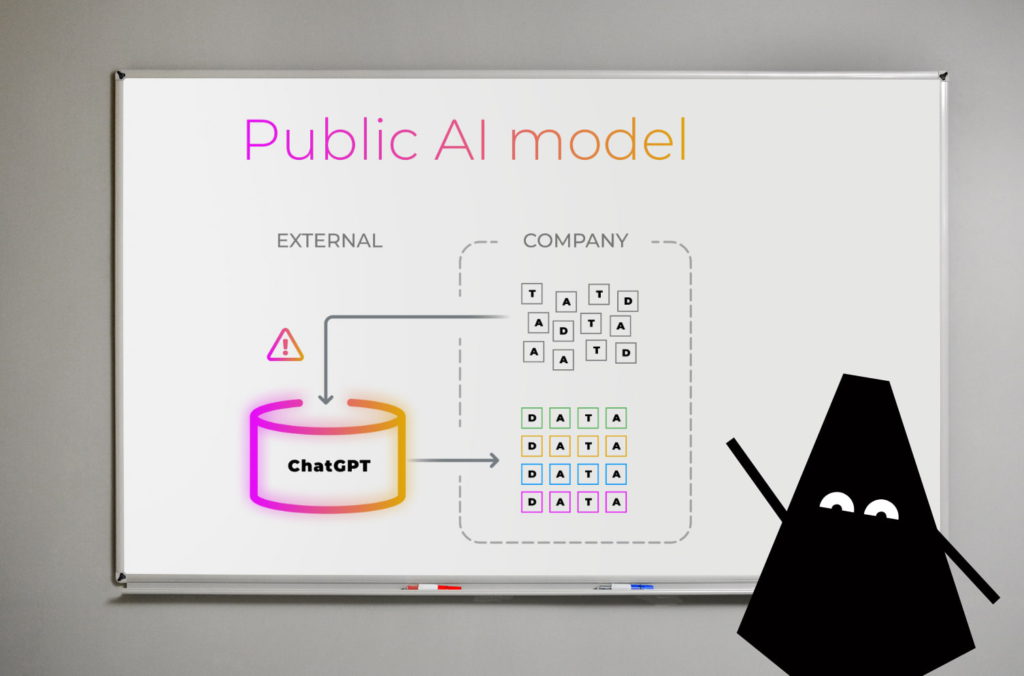

How the public AI model works

For starters, let’s figure out how a public model works. At its core, ChatGPT is a large language model that processes and generates text based on the input it receives. It’s trained on vast datasets encompassing a wide range of topics, enabling it to understand and respond to various queries.

Public AI models are typically hosted on cloud platforms, which poses security and privacy risks. Here is the thing: in a public AI model, data is sent from the company to an external ChatGPT service, which processes the information and returns results. This can expose sensitive data to potential security risks.

Risks of using a non-private ChatGPT

At this point, let’s look more closely at the risks of AI adoption in healthcare, i.e. the risks inherent in public AI models and the reasons people hesitate to use them at work. As mentioned above, the main risks concern credibility, security, and ethics.

Credibility risks

Public AI models, while powerful, are trained on vast and varied datasets that may include outdated or incorrect information. For instance, ChatGPT 3.5 models are trained on data through September 2021, and for ChatGPT 4 the knowledge cutoff date is April 2023. In addition, because the output is based on that general data, it may not be specific to the client’s context.

In healthcare, however, precision and accuracy are critical. Outdated or generic output can lead, for example, to the dissemination of misleading or incorrect medical advice.

- Outdated information: Public models may provide recommendations based on old medical guidelines or research, potentially compromising patient care.

- Source uncertainty: The sources of information used to train public models are often not transparent, making it difficult for healthcare professionals to verify the reliability of the information provided.

Ethical concerns

The ethical implications of using public AI in healthcare are profound:

- Bias and fairness: Public AI models may inherit biases present in their training data, leading to unfair or biased output. This can particularly affect vulnerable populations and exacerbate healthcare disparities.

- Accountability: Determining accountability for decisions made based on AI recommendations is complex. Public AI services do not provide clear mechanisms for tracing decision paths or holding entities accountable for errors.

Security risks

Security is a paramount concern when using AI in healthcare due to the sensitive nature of patient data:

- Data breaches: Sending patient data to an external ChatGPT service increases the risk of data breaches. Any intercepted data can lead to serious privacy violations.

- Regulatory compliance: Healthcare organizations must comply with strict regulations like HIPAA and GDPR. Using a public AI service can complicate compliance, especially if the service provider’s data handling practices are not transparent or do not meet regulatory standards.

- Unauthorized access: Public AI models hosted on cloud platforms can be vulnerable to unauthorized access. Without robust security measures, there’s a risk that sensitive patient data could be accessed by malicious actors.

Erasing security risks with a private ChatGPT

Whatever AI’s current impact on healthcare data security, the situation can be steered in the right direction. To mitigate these risks, hosting a local version of ChatGPT within the secure infrastructure of a healthcare organization is essential. Here is why:

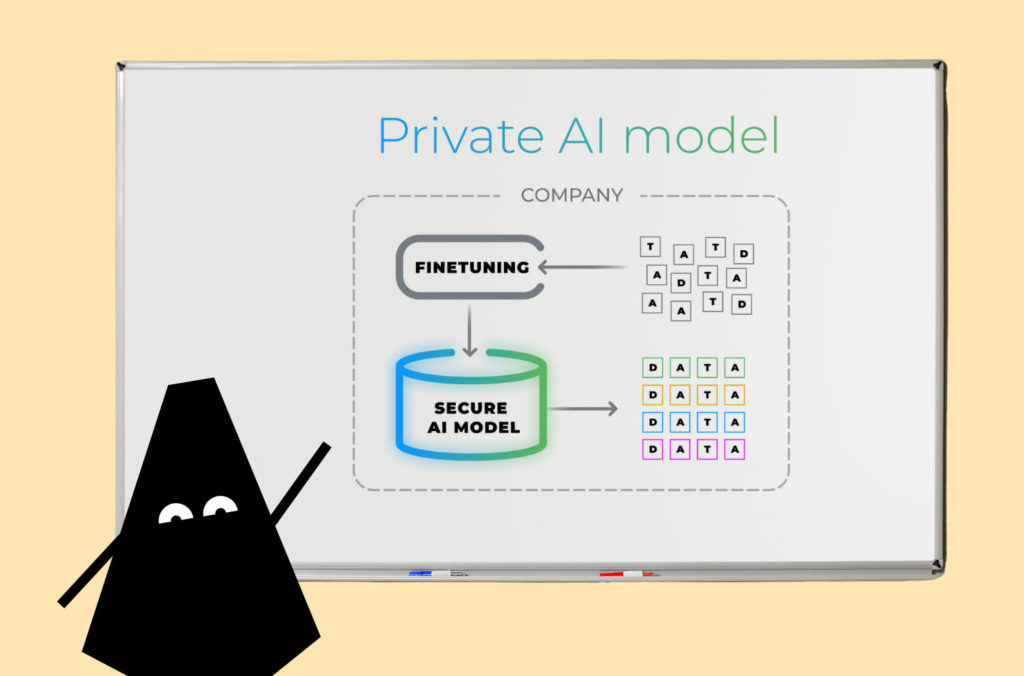

In short, when you launch your own ChatGPT locally in your infrastructure, data never leaves the company and stays safe within it. If you want the details of this secure solution, here they are:

Data privacy and control

- Internal data handling: All data used for training, fine-tuning, and inference stays within the company’s boundaries. This setup prevents sensitive data from being transmitted to external servers or third-party cloud providers, minimizing the risk of data breaches and unauthorized access.

- Confidentiality: By keeping the data and AI model on-premises or within a private cloud, the company maintains full control over who can access the data and under what conditions. This ensures that only authorized personnel have access to sensitive patient information, thereby maintaining confidentiality.
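To make the "data stays inside" idea concrete, here is a minimal sketch of a guard that refuses to send a prompt anywhere except an internal endpoint. The host name `llm.hospital.internal` and the functions `is_internal_endpoint` and `build_request` are hypothetical names chosen for illustration; a real deployment would rely on network-level controls as well, not on application code alone.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allowlist of internal host names (an assumption for this sketch);
# a real deployment would load this from managed configuration.
INTERNAL_HOSTS = {"llm.hospital.internal"}


def is_internal_endpoint(url: str) -> bool:
    """Return True if the URL targets an allowlisted host or a private/loopback IP."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host in INTERNAL_HOSTS:
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal and not on the allowlist: treat it as external.
        return False
    return ip.is_private or ip.is_loopback


def build_request(url: str, prompt: str) -> dict:
    """Prepare a request payload, refusing any endpoint outside the organization."""
    if not is_internal_endpoint(url):
        raise ValueError(f"Refusing to send patient data to external endpoint: {url}")
    return {"url": url, "json": {"prompt": prompt}}


if __name__ == "__main__":
    print(is_internal_endpoint("http://10.0.0.5:8080/v1/chat"))
    print(is_internal_endpoint("https://api.openai.com/v1/chat/completions"))
```

The check denies by default: anything that is neither on the allowlist nor a private address is treated as external.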

Regulatory compliance

Compliance with regulations: Many industries, especially healthcare, are subject to strict data protection regulations such as HIPAA in the United States and GDPR in Europe. A private AI model can be configured to comply with these regulations by implementing necessary safeguards, such as data encryption, access controls, and audit trails. This helps ensure that all data-handling processes meet legal and ethical standards.

Enhanced security measures

- Robust security infrastructure: Hosting the AI model within a controlled environment allows the company to implement robust security measures, including firewalls, intrusion detection systems, and regular security audits. This reduces the vulnerability to cyber-attacks compared to public cloud solutions, which may have broader attack surfaces.

- Data encryption: Both in-transit and at-rest encryption can be employed to protect sensitive data. This ensures that even if data is intercepted or accessed without authorization, it cannot be easily deciphered or misused.

Customizable and specialized security protocols

- Tailored security protocols: Unlike public AI models, which offer generic security features, a private AI model allows for the customization of security protocols to meet specific organizational needs. This includes implementing specialized authentication methods, detailed logging, and monitoring tailored to the unique requirements of the organization.

- Fine-grained access control: The organization can define fine-grained access control policies, restricting data and model access based on roles and responsibilities. This minimizes the risk of internal threats and ensures that only individuals with the necessary clearance can access sensitive information.
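Role-based restrictions of this kind can be sketched in a few lines. The roles, permissions, and function names below are hypothetical and only illustrate the deny-by-default principle; an actual system would typically delegate this to the organization's identity provider.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "physician": {"query_model", "read_patient_record"},
    "billing_clerk": {"query_model"},
    "ml_engineer": {"query_model", "fine_tune_model"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(user.role, set())


def read_patient_record(user: User, patient_id: str) -> str:
    """Return a patient record only if the user's role grants access."""
    if not is_allowed(user, "read_patient_record"):
        raise PermissionError(f"{user.name} ({user.role}) may not read patient records")
    return f"record for {patient_id}"  # Placeholder for a real lookup


if __name__ == "__main__":
    print(is_allowed(User("Dr. Lee", "physician"), "read_patient_record"))
    print(is_allowed(User("Sam", "billing_clerk"), "read_patient_record"))
```

Keeping the mapping in one place makes it easier to review who can reach sensitive information.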

Reduced third-party risk

Minimized dependency on third parties: By maintaining the AI model and data within the organization, there is reduced reliance on third-party vendors or external services. This decreases the potential risks associated with third-party data handling practices, such as insufficient security measures or non-compliance with data protection regulations.

Transparency and accountability

- Transparency in data use: A private AI model allows for full transparency in how data is used and processed. The organization can clearly document data flows, data storage, and data processing activities, providing clear accountability and traceability.

- Auditing and monitoring: The organization can implement comprehensive auditing and monitoring systems to track data access and modifications. This helps identify and respond to security incidents promptly, thereby enhancing overall data governance.
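One possible way to make an audit trail tamper-evident is to chain entries with hashes, so that any later modification breaks verification. The sketch below is an illustration under that assumption, not a description of a specific product; `append_event` and `verify_log` are hypothetical names, and a production log would also record timestamps and live in protected storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # Starting value for the first entry in the chain


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with the serialized event."""
    payload = prev_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list, user: str, action: str, resource: str) -> None:
    """Append an access event whose hash depends on the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    event = {"user": user, "action": action, "resource": resource}
    log.append({"event": event, "hash": _entry_hash(prev_hash, event)})


def verify_log(log: list) -> bool:
    """Recompute the chain and report whether every entry is intact."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log = []
    append_event(audit_log, "dr.lee", "read", "patient/123")
    append_event(audit_log, "dr.lee", "query_model", "llm/chat")
    print(verify_log(audit_log))
```

If someone edits an earlier entry, `verify_log` returns `False`, which gives the monitoring system a simple signal to alert on.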

Reducing credibility risks to zero with fine-tuning and data prep

Tailored model training and fine-tuning

Unlike public models, a private ChatGPT can be specifically fine-tuned using curated datasets relevant to the healthcare provider’s specific domain. This customization enhances the accuracy and relevance of the AI’s outputs, which is crucial in medical settings where precision is paramount.

By using proprietary data for fine-tuning, healthcare organizations can ensure that the AI provides recommendations or other responses that are consistent with the latest medical guidelines and the specific needs of their patients.

- Up-to-date information: Fine-tuning the model on the latest medical research and guidelines ensures that the AI’s recommendations are based on the most current knowledge, thereby avoiding the pitfalls of outdated information.

- Domain-specific training: By training the model on data specific to the organization’s practice area, the AI can provide more accurate and contextually relevant responses, enhancing its credibility among healthcare professionals.

Data preparation and validation

In the preparation phase, the data used to fine-tune the private AI model undergoes rigorous validation and cleaning to ensure its quality and relevance. Careful data preparation is crucial to the AI’s effectiveness and addresses several credibility issues:

- Accuracy: Ensuring that the data is accurate and up-to-date mitigates the risk of the AI providing incorrect or misleading information.

- Source transparency: By using well-documented and trusted sources for training data, healthcare organizations can verify the reliability of the AI’s recommendations, building trust among users.

- Bias mitigation: Careful selection and preprocessing of training data help in identifying and mitigating potential biases, ensuring that the AI’s outputs are fair and unbiased.
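As a rough illustration of the validation and cleaning step, the sketch below filters candidate fine-tuning records by source, freshness, and duplication. The field names (`text`, `source`, `published`), the trusted-source list, and the cutoff date are all assumptions made for the example. Bias review is not covered here, since it usually needs domain experts rather than a simple filter.

```python
from datetime import date

# Hypothetical trusted sources and freshness cutoff, for illustration only.
TRUSTED_SOURCES = {"internal_guidelines", "peer_reviewed_journal"}
OLDEST_ALLOWED = date(2022, 1, 1)


def clean_records(records: list[dict]) -> list[dict]:
    """Keep records that are non-empty, trusted, recent, and not duplicated."""
    seen = set()
    cleaned = []
    for record in records:
        text = record.get("text", "").strip()
        if not text:
            continue  # Drop empty entries
        if record.get("source") not in TRUSTED_SOURCES:
            continue  # Drop entries without a documented, trusted source
        published = record.get("published")
        if published is None or published < OLDEST_ALLOWED:
            continue  # Drop undated or outdated entries
        key = text.lower()
        if key in seen:
            continue  # Drop duplicates (case-insensitive)
        seen.add(key)
        cleaned.append({**record, "text": text})
    return cleaned


if __name__ == "__main__":
    sample = [
        {"text": "Guideline A", "source": "internal_guidelines", "published": date(2023, 5, 1)},
        {"text": "Old advice", "source": "internal_guidelines", "published": date(2015, 1, 1)},
    ]
    print(len(clean_records(sample)))
```

Each rule maps to one of the points above: the date check targets accuracy, and the source check targets source transparency.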

Ethical issues are also neutralized

By preparing and curating training datasets, healthcare organizations can ensure that the AI model reflects ethical principles, such as fairness and impartiality. Fine-tuning allows for the incorporation of ethical guidelines and sensitivity to diverse patient populations, thereby promoting equity in healthcare delivery.

By maintaining rigorous oversight and ethical scrutiny throughout the development and implementation of a local ChatGPT model, healthcare providers can build trust with patients and professionals, demonstrating a commitment to ethical standards and patient rights.

Plus, by hosting the AI model locally, healthcare organizations maintain full control over data management and usage, ensuring that patient information is handled in compliance with ethical standards and regulatory requirements.

Conclusion: Local ChatGPT makes the difference

As you may have noticed, the answer lies in the article title. To address security and other concerns related to using ChatGPT, you need a local ChatGPT installed in your infrastructure and fine-tuned to produce the most relevant and reliable output.

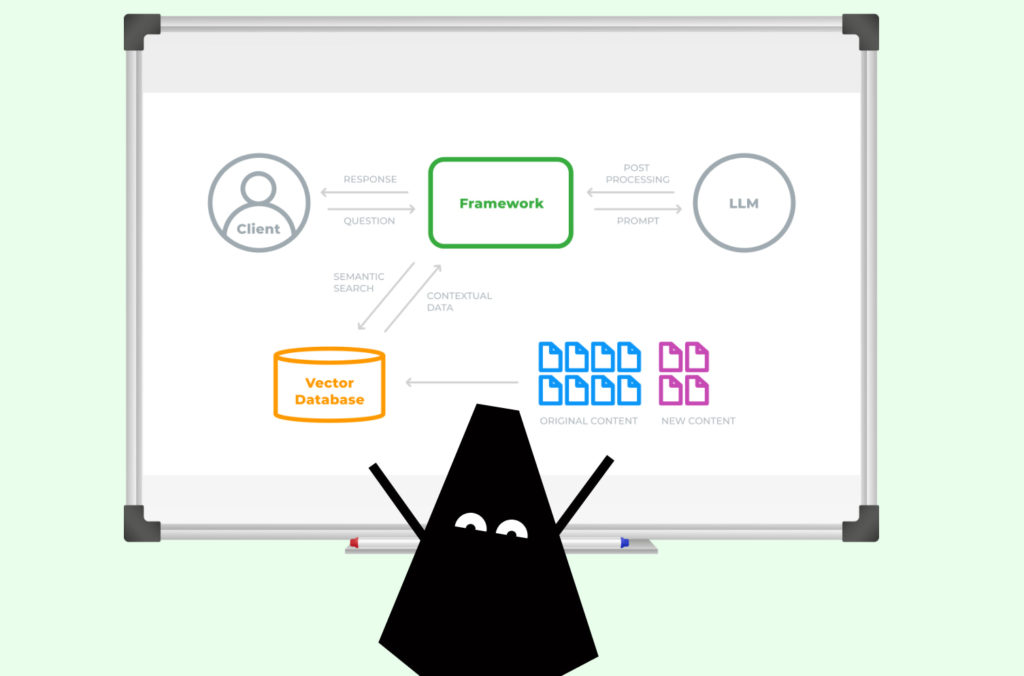

To that end, we have delivered two projects: one is about developing a local AI chatbot, and the other one is about deploying a private LLM. And of course, we have completed a bunch of cases regarding improving patient experience, healthcare sales process, clinical study synchronization, and more.

Get your private medical ChatGPT and be sure your patient data is safe

FAQ

If I want this local ChatGPT, what can you tell me about its quality and price?

The most welcome benefit is cost-effectiveness. After comparing different LLMs with ChatGPT-4o and 3.5, we picked the LLM that offers the best price-quality ratio. It is much cheaper, and its output quality is nearly the same as that of GPT-4o. We reached the following figures: USD 0.00586 per 1,000 tokens processed, compared to USD 0.01 for ChatGPT-4o, and 382 score points for response quality.
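To see what the per-token prices quoted above mean at scale, here is a small worked calculation. The monthly volume of 50 million tokens is a hypothetical workload chosen for the example; only the two per-1,000-token prices come from the figures above.

```python
PRICE_PER_1K_LOCAL = 0.00586  # USD per 1,000 tokens, selected LLM (figure quoted above)
PRICE_PER_1K_GPT4O = 0.01     # USD per 1,000 tokens, ChatGPT-4o (figure quoted above)


def cost_usd(tokens: int, price_per_1k: float) -> float:
    """Cost in USD of processing the given number of tokens."""
    return tokens / 1000 * price_per_1k


def savings_percent(local_price: float, reference_price: float) -> float:
    """Relative saving of the local price against the reference price, in percent."""
    return (reference_price - local_price) / reference_price * 100


if __name__ == "__main__":
    monthly_tokens = 50_000_000  # Hypothetical workload, an assumption for this example
    local = cost_usd(monthly_tokens, PRICE_PER_1K_LOCAL)
    reference = cost_usd(monthly_tokens, PRICE_PER_1K_GPT4O)
    print(f"Local LLM:  USD {local:.2f}")
    print(f"ChatGPT-4o: USD {reference:.2f}")
    print(f"Saving:     {savings_percent(PRICE_PER_1K_LOCAL, PRICE_PER_1K_GPT4O):.1f}%")
```

At these prices the relative saving is about 41%, regardless of volume. The calculation covers token processing only and leaves out hosting and maintenance costs.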

What services does GreenM offer to support the operationalization of these AI models?

We provide a comprehensive service where our engineers handle the entire operationalization process. This includes installing AI models locally, integrating them into the client’s infrastructure and applications, and working closely with clients’ teams to ensure a smooth connection and knowledge transfer. Our engineers are available in both on-shore and off-shore locations to ensure 24/7 coverage of business operations. This support is crucial for organizations needing seamless integration and continuous support.

How does GreenM ensure compliance with regulations like HIPAA when installing these models locally?

Compliance is integrated into every step of our process. We conduct thorough audits and implement strict access controls to ensure that all data handling meets regulatory standards. By keeping data on-premises, we minimize risks associated with data transfer and external storage, making it easier for healthcare organizations to maintain compliance. This is essential for anyone focused on regulatory adherence and data security.