Value delivery

Working on the project, our team achieved the following results:

1

Implementing the plugin to support configurations of existing data tasks in Airflow

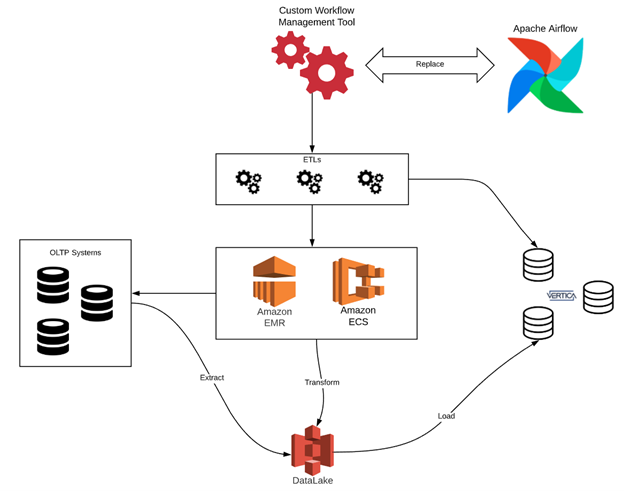

It was critical to preserve the configuration of the existing data tasks in the new Airflow system. Simply deploying Airflow was not enough; the framework's functionality had to be extended. Our team implemented custom Airflow operators that read the existing data pipeline steps and translate them into Airflow tasks. As a result, this custom Airflow plugin allowed us not only to migrate existing sub-pipelines with minimal changes but also to improve the overall readability of the system.
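To illustrate the idea of translating legacy pipeline steps into Airflow-style tasks, here is a minimal sketch in plain Python. The legacy step format (`name`, `command`, optional `depends_on`), the `TaskSpec` class, and the `translate_steps` function are all hypothetical and are not taken from the project; in a real plugin, each resulting spec would presumably be handed to a custom operator rather than kept as a dataclass.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical description of one task derived from a legacy step."""
    task_id: str
    command: str
    upstream: list[str] = field(default_factory=list)


def to_task_id(name: str) -> str:
    """Normalize a human-readable step name into an identifier."""
    return name.strip().lower().replace(" ", "_")


def translate_steps(legacy_steps: list[dict]) -> list[TaskSpec]:
    """Translate legacy step configs into task specs.

    Assumption: a step without "depends_on" runs after the previous step,
    which mirrors a simple sequential legacy pipeline.
    """
    specs: list[TaskSpec] = []
    previous_id = None
    for step in legacy_steps:
        task_id = to_task_id(step["name"])
        if "depends_on" in step:
            upstream = [to_task_id(dep) for dep in step["depends_on"]]
        elif previous_id is not None:
            upstream = [previous_id]
        else:
            upstream = []
        specs.append(TaskSpec(task_id, step["command"], upstream))
        previous_id = task_id
    return specs


# Hypothetical legacy configuration, for illustration only.
legacy_pipeline = [
    {"name": "Extract Orders", "command": "extract.sh orders"},
    {"name": "Clean Orders", "command": "clean.sh orders"},
    {"name": "Load Orders", "command": "load.sh orders",
     "depends_on": ["Clean Orders"]},
]
specs = translate_steps(legacy_pipeline)
for spec in specs:
    print(spec.task_id, "<-", spec.upstream)
```

Keeping the translation as a pure function over the existing configuration is one way to migrate sub-pipelines without rewriting them: the legacy files stay the source of truth, and only the translation layer knows about the orchestrator.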

2

Automating the manual restart operation

The existing workflow orchestration system had no automated restart after a failure or shutdown. Because every restart had to be done manually, engineers spent a lot of time on this. Our team developed a new Airflow feature for the custom operators: an auto-restart mechanism with a configurable number of retries. Now, in the event of unforeseen issues or delays, the system automatically resumes execution of the data pipeline.
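The auto-restart behavior can be sketched as a small retry loop. This is an illustrative stand-in, not the team's implementation: `run_with_retries`, its parameters, and `flaky_step` are hypothetical names. Airflow operators also accept `retries` and `retry_delay` arguments, but the text does not say whether the custom feature builds on them.

```python
import time


def run_with_retries(task, retries=3, delay_seconds=0.0):
    """Call task(); on an exception, retry up to `retries` more times.

    Returns (result, attempts). Re-raises the last exception once the
    initial attempt and all retries have failed.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            if attempts > retries:
                raise
            time.sleep(delay_seconds)


# Hypothetical flaky step: fails twice, then succeeds.
calls = {"count": 0}


def flaky_step():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("transient failure")
    return "done"


result, attempts = run_with_retries(flaky_step, retries=3)
print(result, attempts)
```

Bounding the number of retries matters: a step that fails permanently should surface as an error for an engineer instead of restarting forever.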

3

Improving maintainability and troubleshooting

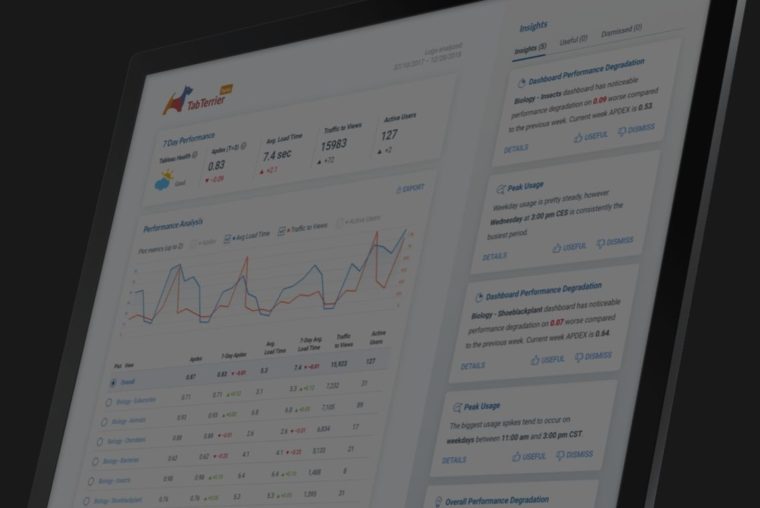

Our team added structured logs streamed to AWS, which allowed us to configure log-based alerts, subscriptions, and statistics dashboards, and to improve overall monitoring.
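As a rough illustration of what "structured logs" can mean in practice, the sketch below emits each log record as a single JSON object using only Python's standard `logging` module. The `JsonFormatter` class and the `dag_id` / `task_id` fields are assumptions made for this example; the actual log schema and the way lines are shipped to AWS are not described in the text.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Hypothetical context fields, supplied by the caller via `extra=`.
            "dag_id": getattr(record, "dag_id", None),
            "task_id": getattr(record, "task_id", None),
        }
        return json.dumps(payload)


# Write to an in-memory stream here; a real setup would use a handler
# that forwards lines to the log backend.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("pipeline.structured_example")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.error(
    "step %s failed", "load_orders",
    extra={"dag_id": "orders_daily", "task_id": "load_orders"},
)
entry = json.loads(stream.getvalue())
print(entry)
```

Because every line is machine-parseable, alerts and dashboards can filter on fields such as `level` or `task_id` instead of matching free-form text.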

4

Improving audit logging

By including user information in the logs, our team improved auditability and increased the overall security of the system.
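One common way to attach user information to log lines in Python is a `logging.LoggerAdapter`. The sketch below is only an assumption about how such audit context could be added; the `AuditAdapter` class, the `user` field, and the sample values are hypothetical.

```python
import io
import logging


class AuditAdapter(logging.LoggerAdapter):
    """Prefix every message with the acting user (hypothetical field)."""

    def process(self, msg, kwargs):
        return f"user={self.extra['user']} {msg}", kwargs


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))

base = logging.getLogger("pipeline.audit_example")
base.setLevel(logging.INFO)
base.addHandler(handler)
base.propagate = False

# Bind the user once; every call through the adapter carries it.
audit = AuditAdapter(base, {"user": "alice"})
audit.info("triggered pipeline %s", "orders_daily")
audit_line = stream.getvalue().strip()
print(audit_line)
```

Binding the user to the adapter once avoids relying on each call site to remember the audit fields.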